Parsing large XML files can be a pain in PHP, as in most languages. Simon Hamp recently gave a talk where he said he was using XMLReader for this purpose. Unfortunately, XMLReader has the same problem as the old XML parsers I used in 2001/2: they are not nice to code with. The trouble with a pull parser like XMLReader is that you have to handle every node yourself, one at a time: each opening tag, closing tag, text section and so on.

SimpleXML is much nicer to use, but it needs too much memory for large XML files. So what if you could get the best feature of XMLReader (handling large files) together with the best feature of SimpleXML (readable code)?
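To make the contrast concrete, here is a small sketch that pulls the titles out of a tiny inline feed both ways. The `<feed>`, `<item>` and `<title>` element names and both function names are made up for illustration. The point is how much bookkeeping the XMLReader version needs compared with SimpleXML.

```php
<?php
// Illustrative feed; the <feed>/<item>/<title> names are assumptions for this example.
$xmlString = '<feed><item><title>First</title></item><item><title>Second</title></item></feed>';

// XMLReader: visit every node and keep track of where we are by hand.
function titles_with_xmlreader(string $xmlString): array
{
    $reader = new XMLReader();
    $reader->XML($xmlString); // acceptable for a tiny string; use open() for real files
    $titles = [];
    $inTitle = false;
    while ($reader->read()) {
        if ($reader->nodeType === XMLReader::ELEMENT && $reader->name === 'title') {
            $inTitle = !$reader->isEmptyElement; // <title/> has no closing tag
        } elseif ($reader->nodeType === XMLReader::END_ELEMENT && $reader->name === 'title') {
            $inTitle = false;
        } elseif ($inTitle && $reader->nodeType === XMLReader::TEXT) {
            $titles[] = $reader->value;
        }
    }
    $reader->close();
    return $titles;
}

// SimpleXML: load the whole tree, then navigate it like nested objects.
function titles_with_simplexml(string $xmlString): array
{
    $titles = [];
    foreach (simplexml_load_string($xmlString)->item as $item) {
        $titles[] = (string) $item->title;
    }
    return $titles;
}

print_r(titles_with_xmlreader($xmlString));
print_r(titles_with_simplexml($xmlString));
```

Both functions return the same list. However, only the first one copes with huge files, because SimpleXML builds the entire tree in memory before you can touch it.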

It turns out you can, and I'm not the first to do it, either. Many large XML files are simply a long list of records, so let's assume for this example that each record is in an `<item>` tag. We can iterate through these with XMLReader and export each record to SimpleXML for easy coding:

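```php
$reader = new XMLReader();
$reader->open($path_to_xml_file);

// A single owner document is enough for every imported record.
$doc = new DOMDocument('1.0', 'UTF-8');

while ($reader->read()) {
    // Only act on the opening tag of each record.
    if ($reader->nodeType == XMLReader::ELEMENT && $reader->name == 'item') {
        // Expand the current <item> subtree into DOM, then hand it to SimpleXML.
        $xml = simplexml_import_dom($doc->importNode($reader->expand(), true));

        echo $xml->title, "\n"; // or whatever
    }
}

$reader->close();
```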

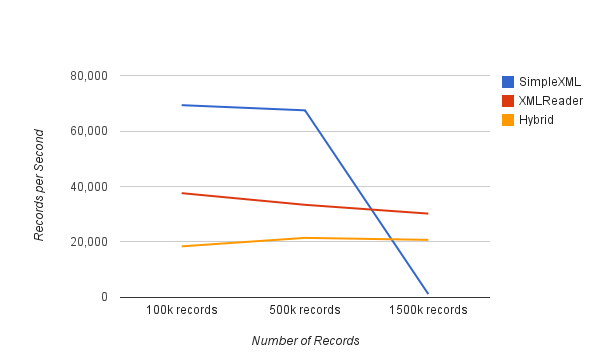
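The loop above can be wrapped up so that calling code only ever sees SimpleXML objects. This is a minimal sketch, not the author's code: `stream_records()` is a hypothetical name, and it assumes records are not nested inside one another. It also swaps the plain `read()` loop for `XMLReader::next()`, which jumps to the next sibling with the given name instead of stepping through every child node of the record that was just expanded.

```php
<?php
/**
 * Hypothetical helper: yield every <$tag> element in a file as a SimpleXMLElement,
 * one record at a time. Assumes record elements are not nested inside each other.
 */
function stream_records(string $path, string $tag): Generator
{
    $reader = new XMLReader();
    if (!$reader->open($path)) {
        throw new RuntimeException("Cannot open $path");
    }
    $doc = new DOMDocument('1.0', 'UTF-8'); // owner document for the imported nodes

    // Advance to the first matching element.
    while ($reader->read()) {
        if ($reader->nodeType === XMLReader::ELEMENT && $reader->name === $tag) {
            break;
        }
    }

    // Hand out each record, then skip its subtree and move to the next one.
    while ($reader->nodeType === XMLReader::ELEMENT && $reader->name === $tag) {
        yield simplexml_import_dom($doc->importNode($reader->expand(), true));
        $reader->next($tag);
    }
    $reader->close();
}
```

Calling code then reads like plain SimpleXML: `foreach (stream_records($path_to_xml_file, 'item') as $item) { echo $item->title, "\n"; }`. Only one record is held as a tree at any moment.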

So what about performance? I created three XML files, one of 108MB with 100,000 records, another with 500k records and one with 1,500k records, and tried the various methods on each. Here is a graph of the number of records processed per second:
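The benchmark itself is not reproduced here. As a rough sketch of how a similar test could be set up, the code below writes a test file with XMLWriter and then times the hybrid loop with `hrtime()`. Because XMLWriter streams to disk, generating a large file needs little memory. The record layout (`<items>`, `<item>`, `<title>`, `<body>`) and both helper names are assumptions.

```php
<?php
// Hypothetical helper: write $count <item> records straight to disk.
// The record layout is an assumption for this sketch.
function write_test_file(string $path, int $count): void
{
    $writer = new XMLWriter();
    $writer->openUri($path);
    $writer->startDocument('1.0', 'UTF-8');
    $writer->startElement('items');
    for ($i = 1; $i <= $count; $i++) {
        $writer->startElement('item');
        $writer->writeElement('title', "Record $i");
        $writer->writeElement('body', str_repeat('lorem ipsum ', 10));
        $writer->endElement(); // </item>
        if ($i % 1000 === 0) {
            $writer->flush(); // write buffered output so memory stays flat
        }
    }
    $writer->endElement(); // </items>
    $writer->endDocument();
    $writer->flush();
}

// Hypothetical helper: run the hybrid XMLReader + SimpleXML loop and time it.
function time_hybrid(string $path): array
{
    $start = hrtime(true); // nanoseconds
    $reader = new XMLReader();
    $reader->open($path);
    $doc = new DOMDocument('1.0', 'UTF-8');
    $records = 0;
    while ($reader->read()) {
        if ($reader->nodeType === XMLReader::ELEMENT && $reader->name === 'item') {
            $xml = simplexml_import_dom($doc->importNode($reader->expand(), true));
            $title = (string) $xml->title; // touch a field, as real code would
            $records++;
        }
    }
    $reader->close();
    $seconds = (hrtime(true) - $start) / 1e9;
    return ['records' => $records, 'per_second' => $records / $seconds];
}
```

For example, `write_test_file('bench.xml', 100000); print_r(time_hybrid('bench.xml'));` prints the record count and a records-per-second figure. The absolute numbers depend entirely on the machine and the record size.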

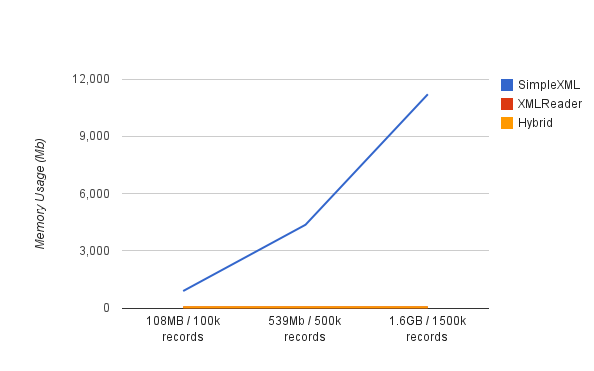

You can see the large slowdown of SimpleXML, which is caused by the large amount of swap the process was using. Measuring memory can be tricky: PHP's memory_get_peak_usage() function reports the same usage for each method, even though the PHP process balloons in size with SimpleXML. On the plus side, this does mean your process doesn't hit PHP's memory limit either. So instead I used the `time` command-line utility to measure the memory usage of the PHP process. XMLReader and my modified 'hybrid' method both use 33MB regardless of data size. SimpleXML uses 900MB for just the 108MB/100k-record file, and, as you can see, it gets a lot worse with the larger files.
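If you would rather check this from inside PHP than with `time`, one option on Unix-like systems is `getrusage()`. Its `ru_maxrss` entry is the peak resident set size as seen by the operating system (kilobytes on Linux, bytes on macOS). The sketch below is an alternative, not the method used for the numbers above: it records both figures around a SimpleXML load of a generated file. The helper name `memory_snapshot()` is hypothetical.

```php
<?php
// Sketch for Unix-like systems only: getrusage() has no ru_maxrss on Windows.
function memory_snapshot(): array
{
    $usage = getrusage();
    return [
        // Peak of memory allocated through PHP's own memory manager.
        'php_peak_bytes' => memory_get_peak_usage(true),
        // Peak resident set size of the whole process as seen by the OS
        // (kilobytes on Linux, bytes on macOS).
        'max_rss' => (int) ($usage['ru_maxrss'] ?? 0),
    ];
}

// Example: load a generated file with SimpleXML and compare the two figures.
$path = tempnam(sys_get_temp_dir(), 'xml');
file_put_contents($path, '<items>' . str_repeat('<item><title>t</title></item>', 50000) . '</items>');

$before = memory_snapshot();
$doc = simplexml_load_file($path);
$after = memory_snapshot();
unlink($path);

printf("PHP peak: %d -> %d bytes\n", $before['php_peak_bytes'], $after['php_peak_bytes']);
printf("Max RSS:  %d -> %d\n", $before['max_rss'], $after['max_rss']);
```

If the behaviour described above holds on your setup, the PHP peak should barely move while the max RSS grows with the size of the loaded document.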

A final word of warning: if you load the XML file into one big string, the whole file is held in memory, as you might expect, and that costs a fair amount. So you should not use XMLReader's XML() method to read content from a string; always use the open() method to read from the file or URL directly.
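The difference comes down to which method feeds the reader. In this sketch, the hypothetical `count_items()` helper counts `<item>` elements either way. With `XML()`, `file_get_contents()` first builds a PHP string as large as the whole file. With `open()`, libxml reads the file incrementally as the cursor advances.

```php
<?php
// Hypothetical helper: count <item> elements, reading either via a string or from the file.
function count_items(string $path, bool $fromString): int
{
    $reader = new XMLReader();
    if ($fromString) {
        // Avoid for big files: the entire document becomes one PHP string first.
        $reader->XML(file_get_contents($path));
    } else {
        // Preferred: libxml pulls data from the file (or URL) as reading proceeds.
        if (!$reader->open($path)) {
            throw new RuntimeException("Cannot open $path");
        }
    }
    $count = 0;
    while ($reader->read()) {
        if ($reader->nodeType === XMLReader::ELEMENT && $reader->name === 'item') {
            $count++;
        }
    }
    $reader->close();
    return $count;
}
```

Both variants give the same count. Only the open() variant keeps memory use independent of the file size.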

You can find the little benchmark I wrote for this on GitHub.